Hyperspectral imaging for satellites and space exploration vehicles

From visible to SWIR, and beyond

A fleet of miniaturized satellites will soon circle the earth, while robotic vehicles explore other planets. By taking hyperspectral sensing on board, they can reveal what happens in and on the surface below them.

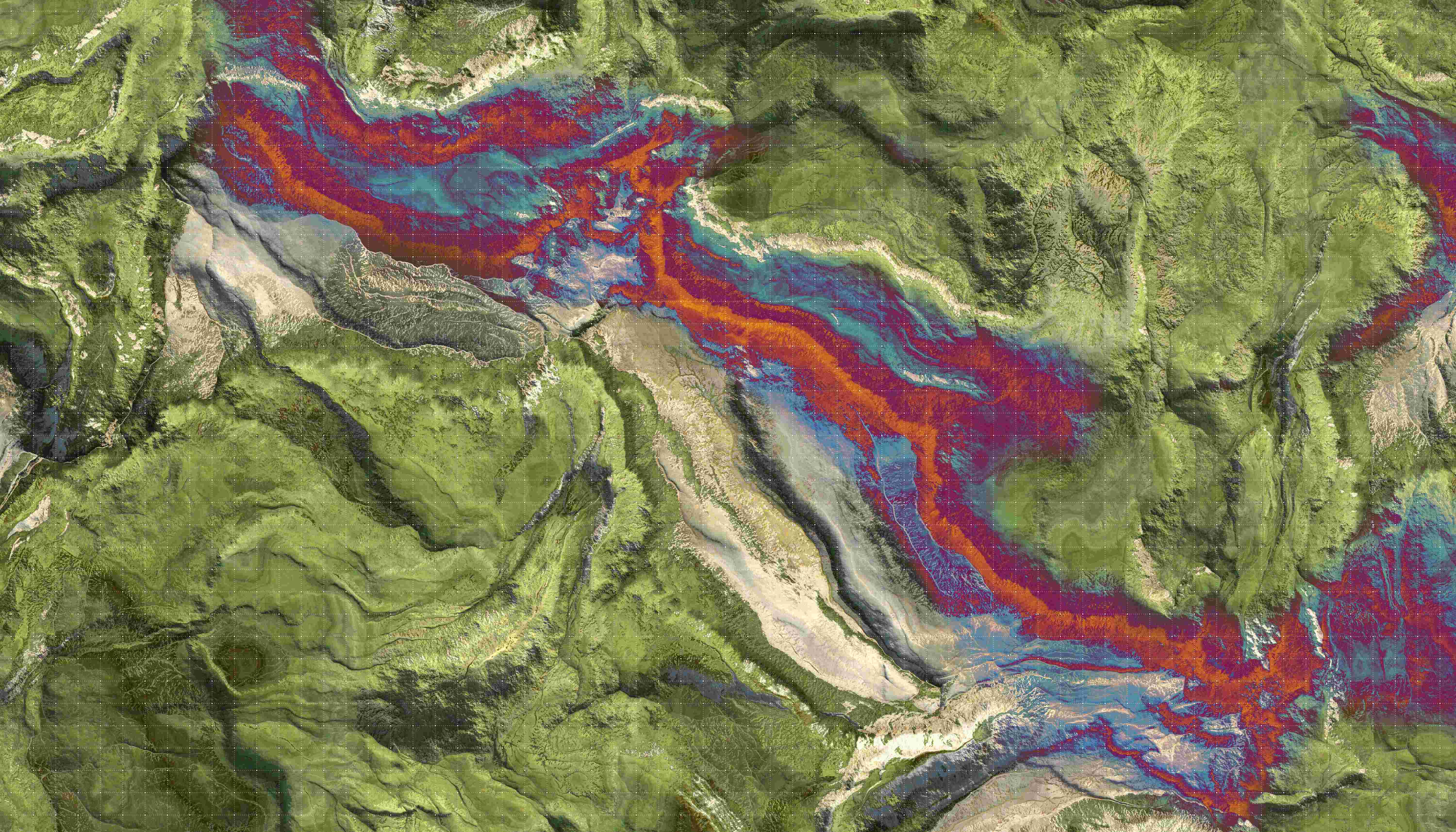

Which parts of a mountain range are teeming with valuable metals? Where are the islands of waste plastic in our oceans? What’s the moisture level of a piece of land after a heat wave?

All that information – and more – can be obtained through hyperspectral remote sensing.

By equipping a satellite or space rover with hyperspectral imaging capabilities, you’re able to see the big picture, in sharp detail. Each pixel contains a complete breakdown of the visible and invisible frequencies of the reflected light, revealing the spectral signatures of minerals, soil, vegetation and more.
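To make the idea of a per-pixel spectrum concrete, here is a minimal sketch of how a spectrum could be matched against known spectral signatures using the spectral angle, a common similarity measure in remote sensing. The four-band signatures, the `LIBRARY` name and the tiny hypercube are made-up values for illustration only, not imec data or imec software.

```python
import math

def spectral_angle(spectrum, reference):
    """Angle in radians between two spectra; smaller means more similar."""
    dot = sum(s * r for s, r in zip(spectrum, reference))
    norm_s = math.sqrt(sum(s * s for s in spectrum))
    norm_r = math.sqrt(sum(r * r for r in reference))
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_angle = max(-1.0, min(1.0, dot / (norm_s * norm_r)))
    return math.acos(cos_angle)

def classify_pixel(spectrum, library):
    """Return the name of the library signature closest in spectral angle."""
    return min(library, key=lambda name: spectral_angle(spectrum, library[name]))

# Hypothetical 4-band reflectance signatures (illustrative values only).
LIBRARY = {
    "vegetation": [0.05, 0.08, 0.04, 0.50],
    "bare_soil": [0.10, 0.15, 0.20, 0.25],
    "water": [0.06, 0.04, 0.02, 0.01],
}

# A tiny 1 x 2 hypercube: cube[row][col] is the spectrum of one pixel.
cube = [[[0.06, 0.09, 0.05, 0.45], [0.05, 0.03, 0.02, 0.01]]]

labels = [[classify_pixel(pixel, LIBRARY) for pixel in row] for row in cube]
print(labels)
```

Because the spectral angle ignores overall brightness, the same material tends to get the same label in sunlit and shaded pixels, which is one reason it is a popular first-pass classifier.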

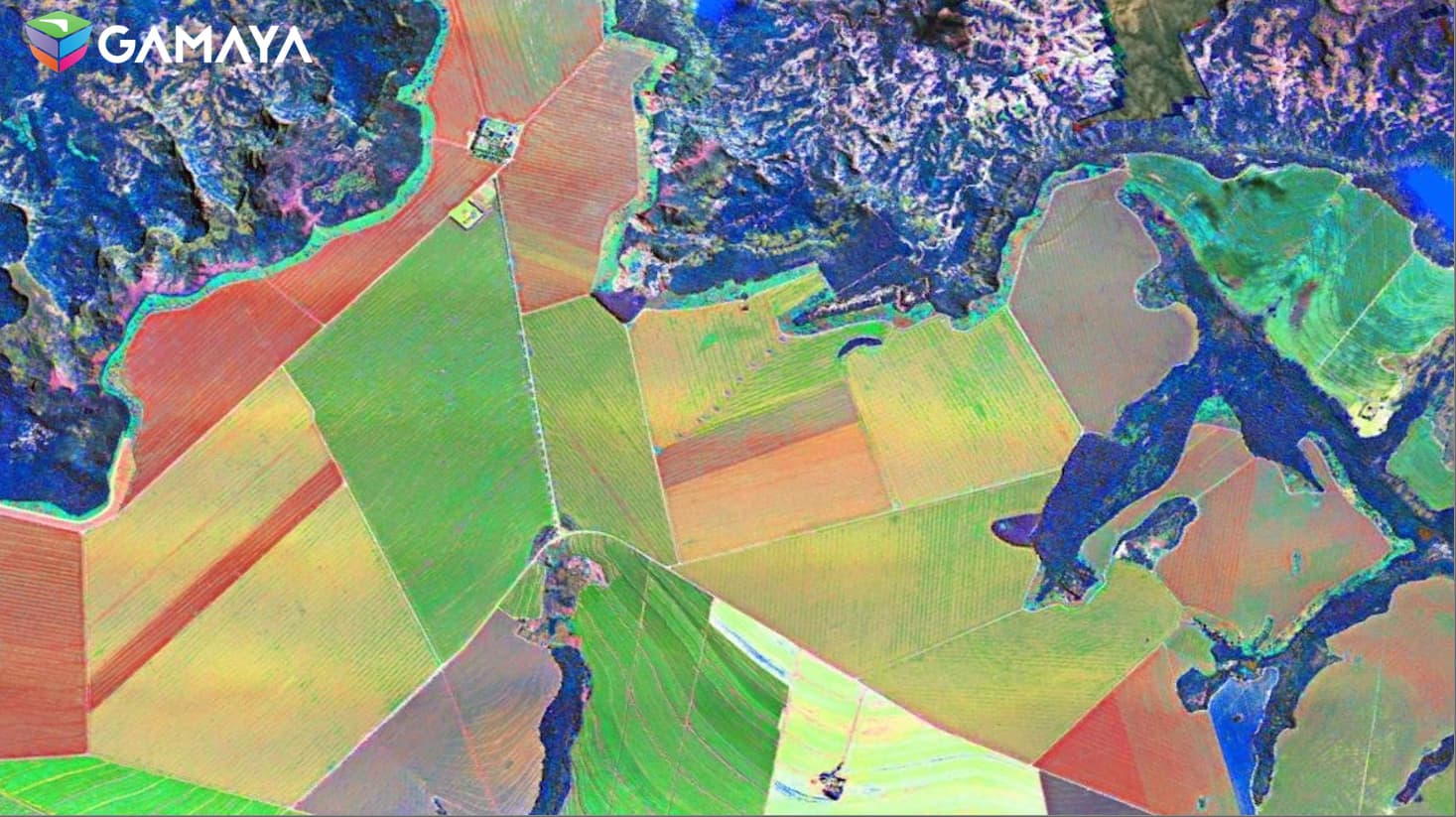

Hyperspectral aerial image of large test fields of soybean cultures in Brazil. The color image is augmented with spectral features to show specific variations within crop cultures (e.g. disease patterns) – Courtesy of GAMAYA.

Applications of hyperspectral remote sensing range from precision agriculture to geology, environmental monitoring and archaeology.

Hyperspectral remote sensing for miniaturized satellites

Until recently, hyperspectral remote sensing suffered from low temporal resolution: images of the same location were taken one or two weeks apart, the time a large satellite needs before it passes over that location again. For many applications, such as agriculture, that revisit rate was too low to fully realize the benefits.

That’s why the advent of low-cost miniaturized satellites – so-called CubeSats – promises to be a game-changer for hyperspectral remote sensing. They allow for the development of a constellation of satellites that scan the same location almost daily.

Hyperspectral imaging for space exploration vehicles

Spectral imaging can be used to empower space explorers and robotic missions with exceptional capabilities. The possibilities for opening new horizons in space research range from crafting intricate maps to analyzing planetary atmospheres, identifying valuable minerals, and exploring potential habitats for life.

Specific challenges in space

In space, and especially for small satellites and space rovers, the constraints differ from those on the ground:

- The payload must be extremely compact and light to save costs.

- Because the lighting varies, the sensor must also operate in low-light conditions.

- Satellites scan the earth while orbiting, and the smaller the satellite, the less stable its pointing. Instability is also an issue for space rovers as they scan the rough surface of a planet.

These constraints are difficult to meet with grating-based cameras. They’re bulky. They suffer from a low signal-to-noise ratio in low light. And because they build up an image line by line, any instability between lines distorts the reconstructed image.

Watch this webinar to learn more about the data-processing pipeline in imec's hyperspectral software, which enables you to acquire reliable spectral images and videos in real-life circumstances.

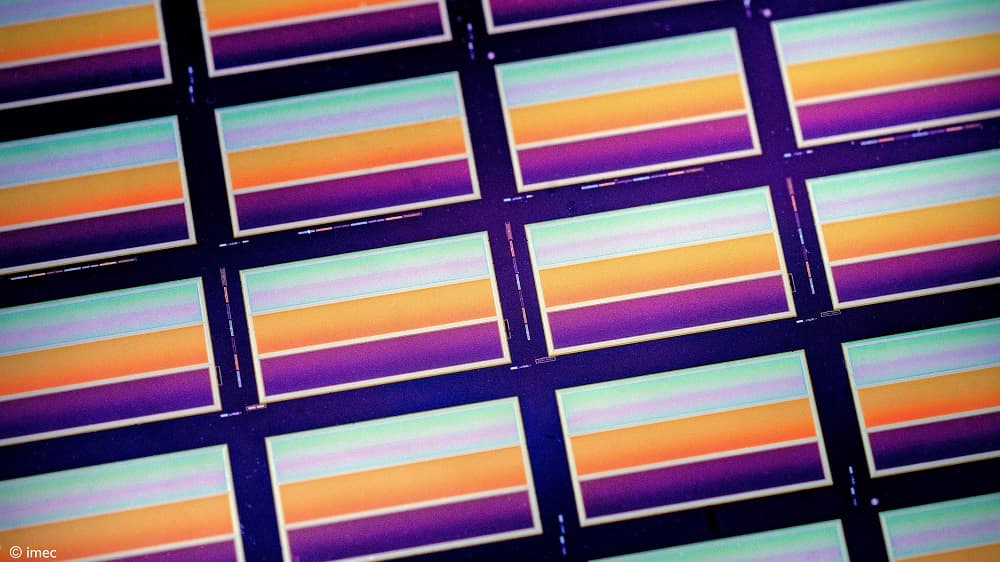

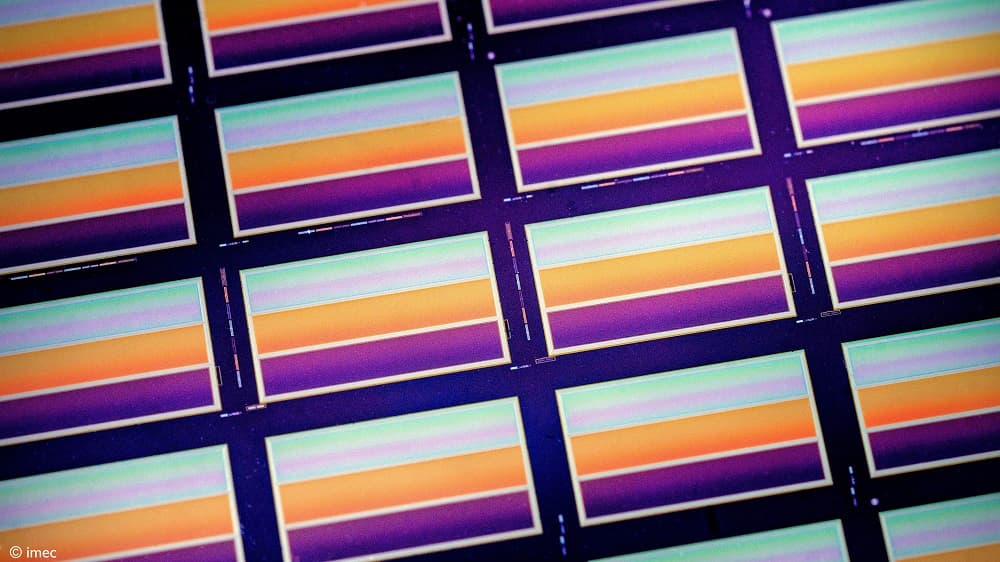

On-chip SWIR snapshot and push-frame sensors for hyperspectral satellites and space exploration vehicles

As a leading R&D center for semiconductor technology, imec can deposit and pattern spectral filters on the surface of area-scan image sensors. That makes it possible to create push-frame hyperspectral sensors that relax the constraints on the line-of-sight pointing accuracy in small satellites.
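The push-frame principle can be sketched in a few lines. The example below assumes, purely for illustration, one filter band per sensor row and a platform that advances exactly one ground line per frame; the function names are hypothetical and this is not imec's processing pipeline. In practice, each frame is a full 2D image, so frames can be registered against each other before assembly, which is what relaxes the pointing requirements.

```python
def simulate_frames(scene, num_bands):
    """Simulate push-frame acquisition of scene[line][band].

    Assumes one filter band per sensor row and a platform that moves
    exactly one ground line per frame (an idealization).
    Returns frames[t][band]; None marks rows that look past the scene edge.
    """
    num_lines = len(scene)
    frames = []
    for t in range(num_lines + num_bands - 1):
        frame = []
        for band in range(num_bands):
            line = t - band  # ground line under sensor row `band` at frame t
            inside = 0 <= line < num_lines
            frame.append(scene[line][band] if inside else None)
        frames.append(frame)
    return frames

def assemble_cube(frames, num_lines, num_bands):
    """Collect, for every ground line, its sample in every band."""
    return [
        [frames[line + band][band] for band in range(num_bands)]
        for line in range(num_lines)
    ]

# Toy scene: 4 ground lines, 3 bands, each value encodes (line, band).
scene = [[line * 10 + band for band in range(3)] for line in range(4)]
frames = simulate_frames(scene, num_bands=3)
cube = assemble_cube(frames, num_lines=4, num_bands=3)
print(cube == scene)
```

Each ground line is seen through a different filter in each successive frame, so the full spectrum of a line only becomes available once it has passed under every band.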

The same patterning capability can also raise the signal-to-noise ratio of the image: depositing exactly the same spectral filter on adjacent rows of pixels enables time delay and integration (TDI), in which successive exposures of the same ground line are summed.
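Why summing rows helps can be illustrated with a small simulation of TDI (time delay and integration). This sketch assumes independent Gaussian noise on every exposure, which is a simplification; the signal level, noise level and the `tdi_snr` helper are hypothetical. Under that assumption the signal grows with the number of stages N while the noise grows with the square root of N, so the signal-to-noise ratio improves by roughly the square root of N.

```python
import random
import statistics

def tdi_snr(signal, noise_sigma, stages, trials=5000, seed=0):
    """Estimate the SNR after summing `stages` noisy exposures of one line.

    Each exposure is modeled as the true signal plus independent
    Gaussian noise (an assumption made for this sketch).
    """
    rng = random.Random(seed)
    sums = [
        sum(signal + rng.gauss(0.0, noise_sigma) for _ in range(stages))
        for _ in range(trials)
    ]
    return statistics.mean(sums) / statistics.stdev(sums)

snr_1 = tdi_snr(signal=10.0, noise_sigma=5.0, stages=1)
snr_16 = tdi_snr(signal=10.0, noise_sigma=5.0, stages=16)
gain = snr_16 / snr_1  # expected to be close to sqrt(16) = 4
print(f"SNR gain with 16 TDI stages: {gain:.2f}")
```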

In an alternative configuration, it’s possible to combine push-frame hyperspectral imaging with snapshot multispectral imaging. This opens up the possibility of video imaging from space, even from low-earth orbit.

And because data communication options in space are limited, the snapshot capability of our spectral technology also proves invaluable in ensuring efficient data acquisition and analysis for successful missions.

Last but not least: with spectral filters operating in the SWIR spectrum, combined with an off-the-shelf InGaAs detector, imec extends spectral imaging beyond the visible and NIR spectrum.

All this makes these types of sensors ideal for use in satellites and high-altitude drones, for applications such as surveillance and the classification of agricultural soils, minerals and plastic pollution, as well as for space exploration vehicles. With imec's current off-the-shelf sensors, you can evaluate the technology for these use cases before you go to a space-grade sensing solution.

Imec's multi- and hyperspectral imaging sensor for space applications

Want to use hyperspectral remote sensing?

Imec has a long history of applying its innovative technologies to research in and from space. Our hyperspectral imaging sensors have already been used in the CHIEM project, which developed a novel compact hyperspectral imager compatible with a 12U CubeSat.

Contact us if you want to use our sensors for your satellite projects. Our off-the-shelf sensors, which have not been designed for remote sensing and are not space-qualified, can be used for validation. And we're happy to help you with the custom development of your sensor or system.

Videos

Live demonstration: flying a hyperspectral SNAPSHOT drone camera

Using hyperspectral imaging in a drone is challenging:

- Integration in the drone may require a combination of many sensors.

- For good data output, push-broom cameras require a controlled environment, or multiple attempts, and a crew of several people.

From imec’s demo studio we show how imec’s newest snapshot drone camera can be connected to a DJI M600Pro drone and controlled remotely with live streaming of data. A stitched hypercube illustrates how the pre-processing pipeline works to reduce the amount of time spent by researchers before doing their analysis.

Introduction and demonstration: VIS-SWIR low SWaP-C spectral imaging for remote sensing

Spectral imaging has matured over the last five years, and the technology is now moving progressively from the lab to real-life use cases. Watch this presentation to explore how imec drives new remote sensing applications. The 60-minute talk includes a live demonstration of the SNAPSHOT SWIR camera to illustrate how imec's spectral imaging is implemented in practice.

Contact our business development team.